Day 10: System Design Secrets: How Big Tech Picks Winners

Welcome back, and congratulations, my friend! 🎉 You’ve built an ultra-fast caching system, optimized queries, and even convinced the founder that not every single data point needs to be real-time. Life is good… until, while developing the caching system, you notice an important issue with your app.

The Problem

We know that in our app, our cache stores three types of entries:

- Most popular T-shirts

- Most recently added T-shirts

- Most recently viewed T-shirts by users

The first two entries are shared across all users, meaning they won’t create memory issues. However, the third type — tracking the most recently viewed T-shirts by individual users — can be problematic.

As the number of users grows, the number of cache entries grows with it, and the cache can become extremely large. For instance, 1 million users means 1 million entries, each holding an array of T-shirt IDs. Most of those entries belong to users who may never come back, so this is highly inefficient.
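To get a feel for the numbers, here is a rough back-of-the-envelope sketch. The figures `IDS_PER_USER` and `BYTES_PER_ID` are assumptions made up for illustration (they are not from our app), and the estimate ignores per-key and per-entry overhead, which a real cache would add on top.

```python
# Rough estimate of the raw payload held by the per-user "recently viewed" cache.
# IDS_PER_USER and BYTES_PER_ID are illustrative assumptions, not measured values.
IDS_PER_USER = 20   # assumed: T-shirt IDs remembered per user
BYTES_PER_ID = 8    # assumed: each ID stored as a 64-bit integer


def raw_cache_bytes(users, ids_per_user=IDS_PER_USER, bytes_per_id=BYTES_PER_ID):
    """Raw payload size in bytes, ignoring key and metadata overhead."""
    return users * ids_per_user * bytes_per_id


one_million_mb = raw_cache_bytes(1_000_000) / 1_000_000    # 160.0 MB
ten_million_mb = raw_cache_bytes(10_000_000) / 1_000_000   # 1600.0 MB
print(one_million_mb, ten_million_mb)
```

The point is less the exact figure and more the shape: the size grows linearly with the user count, with no upper bound.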

Can We Reduce the Cache Size?

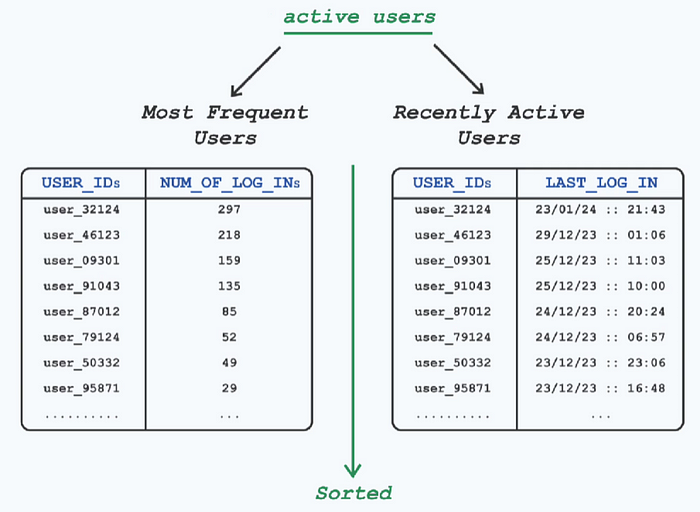

Absolutely. One logical approach is to store cache entries only for active users.

This is where it gets really interesting.

But how do we define an active user? There are two major ways to determine this:

- Frequent Users: Users who log in frequently and consistently use the platform.

- Recently Active Users: Users who visited the platform in the last few days (e.g., yesterday or today). These users are more likely to revisit the products they recently viewed.

Thus, if we need to limit cache storage, we can store only the most active users by sorting them based on:

- Login frequency (number of times they’ve logged in)

- Last login time

Once sorted, we can retain the top 10% of users or store only the top 1,000 user entries in the cache. Any additional entries beyond this limit will be removed, ensuring a fixed cache size of 1,000 entries, which significantly reduces memory usage.
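The sorting step above can be sketched in a few lines. This is a minimal illustration, not our app's actual code: the `UserActivity` record and the `pick_users_to_cache` helper are hypothetical names, and the sketch assumes login frequency is the primary sort key with last login time as the tie-breaker.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class UserActivity:
    # Hypothetical record; field names are assumptions for this sketch.
    user_id: str
    login_count: int
    last_login: datetime


def pick_users_to_cache(users, limit=1000):
    """Return the IDs of the `limit` most active users.

    Users are ranked by login count first, then by most recent login.
    """
    ranked = sorted(
        users,
        key=lambda u: (u.login_count, u.last_login),
        reverse=True,
    )
    return [u.user_id for u in ranked[:limit]]


users = [
    UserActivity("alice", 42, datetime(2024, 5, 1)),
    UserActivity("bob", 3, datetime(2024, 5, 3)),
    UserActivity("carol", 42, datetime(2024, 5, 2)),
]
top_two = pick_users_to_cache(users, limit=2)
print(top_two)  # ['carol', 'alice']
```

Swapping the order of the tuple in `key` would rank by recency first and frequency second, which is the other definition of "active" discussed above.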

Handling New Users in Cache

Now, what happens when a new user logs in and the cache already contains 1,000 entries? Which entry should be removed to accommodate the new user?

The answer lies in one of the most underrated but powerful tricks in system design: cache eviction policies and access patterns.

🎩 The Algorithm’s Magic Trick: Who Stays, Who Goes?

Eviction Policies

There are two commonly used eviction policies for managing cache size:

1. Least Recently Used (LRU)

If the goal is to prioritize recent activity, the Least Recently Used (LRU) policy is applied. In this approach:

- The entry that has gone unused the longest (here, the user whose most recent login is the oldest) is removed from the cache.

- The new user is added in its place.

This process is called eviction, and it ensures that the cache always holds the most relevant and recently active users.

A similar recency-first pattern is often associated with Twitter’s “For You” feed, Instagram Reels, and some YouTube recommendations:

🔹 Prioritizes content that has been interacted with recently

🔹 Older content disappears even if it has millions of views

🔹 Newer content gets a chance to shine

Example 👉 Scenario:

We have a cache of size 3, and we receive the following sequence of page requests: A → B → C → A → D

Step-by-Step Execution:

1. A arrives → Cache: [A]

2. B arrives → Cache: [A, B]

3. C arrives → Cache: [A, B, C] (Cache is now full)

4. A is accessed again → Cache: [B, C, A] (A moves to the most recently used position)

5. D arrives (Cache full, needs eviction)

- LRU removes the least recently used page → “B” (since it wasn’t accessed recently)

- Cache: [C, A, D]

Key Takeaway:

- LRU removes the least recently used entry when the cache is full.

- It ensures that recently used elements stay in the cache.
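If you want to see LRU in code, here is a minimal sketch built on Python's `collections.OrderedDict`. The `LRUCache` class and its `access` method are names chosen for this example, not part of any library, and a production cache would also need things like thread safety and expiry.

```python
from collections import OrderedDict


class LRUCache:
    """Minimal LRU sketch: least recently used key first, most recent last."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()

    def access(self, key, value=None):
        """Record an access to `key`; return the evicted key, or None."""
        evicted = None
        if key in self.entries:
            # Existing key: move it to the most recently used position.
            self.entries.move_to_end(key)
            if value is not None:
                self.entries[key] = value
        else:
            if len(self.entries) >= self.capacity:
                # Cache is full: drop the least recently used key.
                evicted, _ = self.entries.popitem(last=False)
            self.entries[key] = value
        return evicted

    def keys(self):
        return list(self.entries)


cache = LRUCache(capacity=3)
evictions = [cache.access(k) for k in "ABCAD"]
print(evictions)     # [None, None, None, None, 'B']
print(cache.keys())  # ['C', 'A', 'D']
```

This replays the A → B → C → A → D sequence from the example and ends with the same cache contents. For caching function results, Python also ships `functools.lru_cache`, which applies the same policy.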

2. Least Frequently Used (LFU)

Alternatively, we can use the Least Frequently Used (LFU) policy. This method prioritizes users based on their historical activity:

- The cache keeps track of how often each user has logged in.

- When a new user logs in, their login count is initially low.

- If the other cached users have significantly higher login counts, the new user’s entry is the first candidate for eviction, and stricter variants may not admit it to the cache at all.

However, LFU has a drawback — it delays the caching of new but highly active users. This is why social media platforms often favor LRU for recent activity, ensuring that fresh and relevant content is prioritized.

A similar popularity-first pattern is often associated with Netflix, YouTube, Instagram Explore, and TikTok:

🔹 Prioritizes content based on how many times it has been watched/interacted with

🔹 Older, high-engagement content stays

🔹 New content struggles to break in

Example 👉 Scenario:

We have a cache of size 3, and we receive the following access sequence: A → B → C → A → D → B → A

Step-by-Step Execution:

1. A arrives → Cache: [A (1)]

2. B arrives → Cache: [A (1), B (1)]

3. C arrives → Cache: [A (1), B (1), C (1)] (Cache is full)

4. A is accessed again → Cache: [A (2), B (1), C (1)] (A’s frequency increases)

5. D arrives (Cache full, needs eviction)

- LFU removes the least frequently used item → “B” or “C” (both have frequency 1).

- Assume B is removed → Cache: [A (2), C (1), D (1)]

6. B is accessed again → B is no longer in the cache, so it is re-added with a fresh count of 1. C and D are tied at frequency 1; assuming ties are broken by evicting the less recently used entry, C is evicted → Cache: [A (2), D (1), B (1)]

7. A is accessed again → Cache: [A (3), D (1), B (1)]

Key Takeaway:

- LFU removes the least frequently used element.

- It prioritizes elements that are accessed more often over time.
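Here is a matching LFU sketch. The `LFUCache` class is a name made up for this example, and two choices in it are assumptions rather than part of LFU itself: ties on frequency are broken by evicting the less recently used key, and a key that is evicted and later re-added starts again from a count of 1. The `min` scan is O(n) per eviction, which is fine for a demo but not for a large cache.

```python
class LFUCache:
    """Minimal LFU sketch with least-recently-used tie-breaking (an assumption)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = {}     # key -> number of accesses while cached
        self.last_used = {}  # key -> logical time of the latest access
        self.clock = 0

    def access(self, key):
        """Record an access to `key`; return the evicted key, or None."""
        self.clock += 1
        evicted = None
        if key not in self.counts:
            if len(self.counts) >= self.capacity:
                # Lowest count loses; among equals, the stalest access loses.
                evicted = min(
                    self.counts,
                    key=lambda k: (self.counts[k], self.last_used[k]),
                )
                del self.counts[evicted]
                del self.last_used[evicted]
            self.counts[key] = 0
        self.counts[key] += 1
        self.last_used[key] = self.clock
        return evicted


cache = LFUCache(capacity=3)
evictions = [cache.access(k) for k in "ABCADBA"]
print(evictions)     # [None, None, None, None, 'B', 'C', None]
print(cache.counts)  # {'A': 3, 'D': 1, 'B': 1}
```

Replaying A → B → C → A → D → B → A gives the same evictions (B, then C) and the same final counts as the walkthrough above.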

A Final Note:

1. Eviction Policy: Determines which entries should be removed from the cache when it reaches its limit.

2. LRU vs. LFU:

- LRU: Focuses on recent activity, ensuring newly active users are prioritized.

- LFU: Retains users with a long history of engagement but may delay caching new users.

3. Cache Performance Factors:

- Eviction Policies

- Write Policies

- Consistency

- Placement & Data Distribution

By optimizing caching strategies with these factors in mind, engineers can design a highly efficient caching system.

🏆 How You Can Hack the Algorithm

Now that you know the system design game, here’s how you win:

📌 Want to rank on Google? Make sure your content stays fresh (LRU wins).

📌 Want to go viral on YouTube? Get high retention (LFU keeps your video alive).

📌 Want your tweets to blow up? Engage within the first hour (Twitter’s LRU).

Big Tech isn’t magic. It’s just smart caching.

📢 Stay tuned for Day 11! 👀 See Yaa in the next blog.